AI Tic Tac Toe

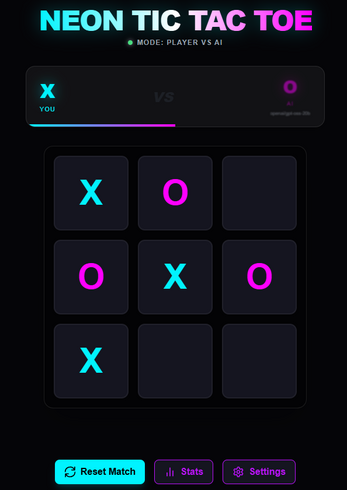

🧠 Neon AI Tic-Tac-Toe: The LLM Benchmark Arena

Can a Large Language Model actually understand game logic, or is it just hallucinating moves?

This project started as an experiment to answer that question. I wanted to see how different AI models—from Google's Gemini to local models like Qwen and TinyLlama—perform when asked to play a game with strict rules and spatial reasoning.

This app is a battleground and benchmark tool for Artificial Intelligence.

🎮 Play Your Way

🌐 Play in Browser (Web Version)

Check out the lightweight web version instantly! Perfect for testing Cloud AIs (like Gemini) or playing Human vs. AI.

Note: Advanced local model features are limited in the web version.

🖥️ Download for Desktop

For the full experience, download the Windows/Mac/Linux version below. The desktop app unlocks:

- Built-in Local Models: Download and run models (like Qwen 0.5B or TinyLlama) directly inside the app—no internet required!

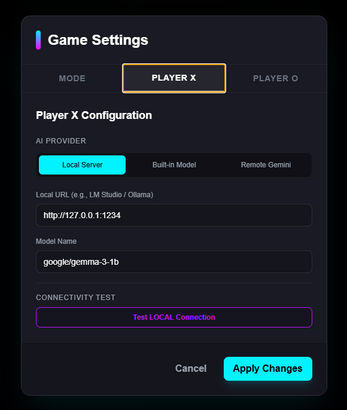

- Local Server Support: Connect to Ollama or LM Studio running on your machine to test massive models like Llama 3, Mistral, and GPT. (This also works from the browser version.)

- Persistent Stats: Save your benchmark history to your disk.
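To give a feel for what Local Server Support involves, here is a minimal sketch of asking an Ollama server for a move. The prompt wording, the board encoding, and the helper names (`renderBoard`, `buildOllamaRequest`, `parseMove`) are assumptions made for illustration, not the app's actual code. The only external details relied on are Ollama's default local address and the `model`, `prompt`, and `stream` fields of its `/api/generate` endpoint.

```typescript
// Hypothetical types and helpers; the app's real prompt and parser may differ.
type Cell = "X" | "O" | null;
type Board = Cell[]; // 9 cells, index 0 = top-left, 8 = bottom-right

// Render the board as three text rows, showing the cell number for empty cells.
function renderBoard(board: Board): string {
  const rows: string[] = [];
  for (let r = 0; r < 3; r++) {
    const row = [0, 1, 2].map((c) => board[r * 3 + c] ?? String(r * 3 + c));
    rows.push(row.join(" | "));
  }
  return rows.join("\n");
}

// Build the URL and JSON body for Ollama's POST /api/generate endpoint.
function buildOllamaRequest(model: string, board: Board, player: "X" | "O") {
  const prompt =
    `You are playing tic-tac-toe as ${player}.\n` +
    `${renderBoard(board)}\n` +
    `Reply with the number of one empty cell and nothing else.`;
  return {
    url: "http://localhost:11434/api/generate", // Ollama's default local address
    body: { model, prompt, stream: false },
  };
}

// Extract the first standalone digit 0-8 from the reply; null if there is none.
function parseMove(reply: string): number | null {
  const match = reply.match(/\b[0-8]\b/);
  return match ? Number(match[0]) : null;
}
```

The body would be sent with something like `fetch(url, { method: "POST", body: JSON.stringify(body) })`, and the model's text read from the `response` field of the returned JSON. A reply that yields `null` from `parseMove` is the kind of turn a benchmark could count as a failed move.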

🧪 Features

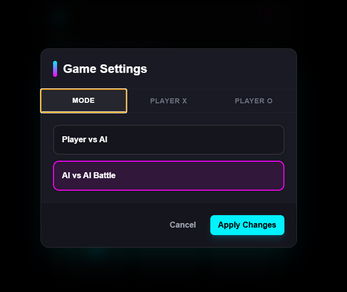

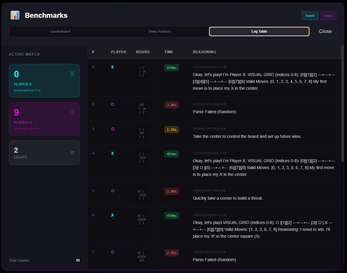

🤖 AI vs. AI Gauntlet

The core of this software. Set up two different AI models and watch them battle it out.

- Auto-Run: Run 10 or 50 games in "Headless Mode" (super fast) to gather data.

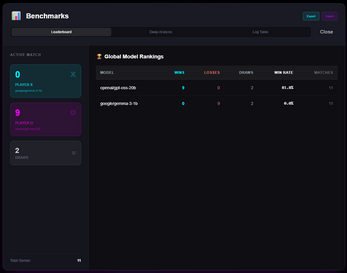

- Leaderboards: Track which models have the highest win rates.

- Benchmarking: See which models understand strategy vs. which ones just make random valid moves.

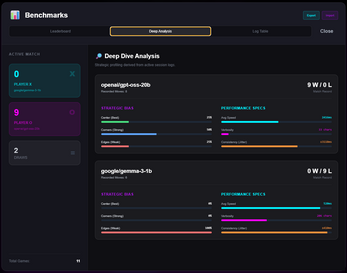
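A headless auto-run like the one described above boils down to a loop with no rendering. The sketch below is an assumption about how such a loop could look, not the app's implementation: the `Player` function type, the forfeit-on-illegal-move rule, and the `firstEmpty` stand-in player are all hypothetical. In practice each `Player` would wrap a call to a model.

```typescript
type Mark = "X" | "O";
type Cell = Mark | null;
type Result = Mark | "draw";
// Hypothetical player interface: given the board and its mark, return a cell 0-8.
type Player = (board: Cell[], mark: Mark) => number;

const LINES: ReadonlyArray<readonly [number, number, number]> = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8], // rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8], // columns
  [0, 4, 8], [2, 4, 6],            // diagonals
];

// Return the mark that owns a complete line, or null if nobody has won yet.
function winner(board: Cell[]): Mark | null {
  for (const [a, b, c] of LINES) {
    const m = board[a];
    if (m && m === board[b] && m === board[c]) return m;
  }
  return null;
}

// Play one game. Assumption of this sketch: an illegal move forfeits the game.
function playGame(x: Player, o: Player): Result {
  const board: Cell[] = new Array<Cell>(9).fill(null);
  let mark: Mark = "X";
  for (let turn = 0; turn < 9; turn++) {
    const other: Mark = mark === "X" ? "O" : "X";
    const move = (mark === "X" ? x : o)(board.slice(), mark);
    const illegal =
      !Number.isInteger(move) || move < 0 || move > 8 || board[move] !== null;
    if (illegal) return other;
    board[move] = mark;
    if (winner(board)) return mark;
    mark = other;
  }
  return "draw";
}

// Run several games back to back and tally the results.
function runGauntlet(x: Player, o: Player, games: number): Record<Result, number> {
  const tally: Record<Result, number> = { X: 0, O: 0, draw: 0 };
  for (let i = 0; i < games; i++) tally[playGame(x, o)]++;
  return tally;
}

// Stand-in player for trying the loop without a model: take the first empty cell.
const firstEmpty: Player = (board) => board.indexOf(null);
```

Calling `runGauntlet(firstEmpty, firstEmpty, 10)` runs ten games and returns the win and draw counts, which is the raw data a leaderboard would be built from.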

📊 Deep Dive Analytics

We don't just count wins. The Stats Dashboard analyzes the "Cognition" of the models:

- Verbosity: Does the model write a paragraph just to pick a move? (Verbosity tracking.)

- Speed: Average milliseconds per move.

- Strategic Bias: Does the model prefer the Center (Optimal) or does it get stuck on Edges?

- Hallucination Rate: How often does the model try to make an illegal move?
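The four metrics above can all be derived from a per-move log. The sketch below shows one way to compute them. The `MoveRecord` shape, the field names, and the choice to measure verbosity in characters are assumptions for illustration, not the dashboard's actual schema.

```typescript
// Hypothetical record of one model turn; field names are assumptions.
interface MoveRecord {
  cell: number | null; // parsed cell 0-8, or null if the reply had no usable move
  legal: boolean;      // false if the move was unparseable, occupied, or out of range
  replyText: string;   // raw model output
  latencyMs: number;   // time taken to produce the reply
}

interface ModelStats {
  avgReplyChars: number;     // verbosity
  avgLatencyMs: number;      // speed
  hallucinationRate: number; // share of turns that were not a legal move
  centerRate: number;        // strategic bias: share of legal moves per position type
  cornerRate: number;
  edgeRate: number;
}

const CORNERS = new Set([0, 2, 6, 8]);

function summarize(records: MoveRecord[]): ModelStats {
  const sum = (xs: number[]) => xs.reduce((a, b) => a + b, 0);
  const n = records.length || 1; // avoid dividing by zero on an empty log
  const legalCells = records
    .filter((r) => r.legal && r.cell !== null)
    .map((r) => r.cell as number);
  const m = legalCells.length || 1;
  return {
    avgReplyChars: sum(records.map((r) => r.replyText.length)) / n,
    avgLatencyMs: sum(records.map((r) => r.latencyMs)) / n,
    hallucinationRate: records.filter((r) => !r.legal).length / n,
    centerRate: legalCells.filter((c) => c === 4).length / m,
    cornerRate: legalCells.filter((c) => CORNERS.has(c)).length / m,
    edgeRate: legalCells.filter((c) => c !== 4 && !CORNERS.has(c)).length / m,
  };
}
```

Position rates are computed over legal moves only, so a model that keeps naming occupied cells does not distort its own strategic-bias numbers.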

🛠️ Open Source & Hackable

This project is fully Open Source!

- View the Code: Check it out on GitHub.

- Tech Stack: Built with React, Electron, Vite, and Transformers.js.

🧐 The Experiment

I built this to test the limits of Small Language Models (SLMs). While massive models like GPT-4 can play Chess, can a tiny 0.5 Billion parameter model running on a laptop CPU understand the concept of "blocking" an opponent?
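To make "blocking" concrete: a move is a block when it takes the one empty cell in a line where the opponent already holds the other two. Here is a minimal sketch of that check, with hypothetical names (`blockingCells`, `isBlock`) and a board encoded as 9 cells indexed 0 to 8. It illustrates the concept and is not taken from the app.

```typescript
type Mark = "X" | "O";
type Cell = Mark | null;

const LINES: ReadonlyArray<readonly [number, number, number]> = [
  [0, 1, 2], [3, 4, 5], [6, 7, 8], // rows
  [0, 3, 6], [1, 4, 7], [2, 5, 8], // columns
  [0, 4, 8], [2, 4, 6],            // diagonals
];

// Return every empty cell where `opponent` would complete a line on their next move.
function blockingCells(board: Cell[], opponent: Mark): number[] {
  const threats: number[] = [];
  for (const line of LINES) {
    const owned = line.filter((i) => board[i] === opponent);
    const empty = line.filter((i) => board[i] === null);
    if (owned.length === 2 && empty.length === 1) threats.push(...empty);
  }
  return Array.from(new Set(threats)); // a cell can close two lines; list it once
}

// Did `mover` block an immediate threat by playing `move` on this board?
function isBlock(board: Cell[], move: number, mover: Mark): boolean {
  const opponent: Mark = mover === "X" ? "O" : "X";
  return blockingCells(board, opponent).includes(move);
}
```

For example, with X on cells 0 and 1 and O on the center, the only blocking cell for O is 2. A model that plays anywhere else on that board has missed the block.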

Download now and find out which AI is the true logic champion!

I also have similar vs. LLM games almost ready: Connect 4, Guess Who, Pool, Poker, Sumo Backgammon, Battleships, Dominoes, and Checkers. Let me know which one you would like to see first.

Download

Click download now to get access to the following files:
